In today’s rapidly changing technological landscape, businesses face many challenges that require innovative solutions. Oftentimes, these solutions are related to expensive computational problems and need high-performance resources that perform optimization, simulation or machine learning tasks. Next Generation Computing (NGC) technologies emerge as new opportunities to solve such problems better, faster or more efficiently. For businesses the crucial challenge is to be prepared when the technology is ready.

Among the novel NGC technologies are, for example, Neuromorphic and Quantum Computing (NC, QC; Infobox 1 & 2). Additionally, variations and extensions of classical computing paradigms such as Confidential or High-Performance Computing (HPC) are considered NGC.

Infobox 1: Neuromorphic Computing (NC)

NC is a computing paradigm that mimics how the human brain interacts with the world in order to deliver energy-efficient, human-like cognition. To this end, neuromorphic chips learn patterns with spiking neural networks, which, in contrast to standard neural networks, are asynchronous and event-based. As a result, NC can achieve significant power efficiency, which is highly relevant for edge computing [1].

Infobox 2: Quantum Computing (QC)

QC is built upon quantum mechanics and works with quantum bits (qubits). Qubits can exist in a superposition of multiple states and can be entangled, a special kind of non-local correlation between qubits. These properties enable quantum algorithms to be exponentially faster than the best known classical algorithms for certain problems. How to realize this promise with quantum algorithms and how to scale QC hardware for industry-relevant applications are subjects of current research. Today’s quantum technology is not yet ready to compete with classical approaches but is expected to deliver great benefits [2].

These new paradigms will have an unprecedented impact on a multitude of businesses. QC, for example, is expected to break important cyber security mechanisms in the future. To ensure data security, organizations must implement post-quantum cryptography [3], likely within the next 5 to 10 years. A sustainable way to tackle this problem is a Crypto Agility approach, which aims to enable organizations, via technical and procedural means, to rapidly adapt to new cryptographic protocols when needed. Although this is not directly an NGC topic, first discussions are often initiated by an NGC expert team because of its relation to QC.

Achieving a basic level of NGC readiness is therefore important for players in all industries and could become essential for many. In contrast to other new topics in IT, NGC is not restricted to software but is heavily intertwined with highly specialized hardware. Combined with a scarce talent pool, the focus on research and development in the field, and the complex and multidisciplinary nature of these technologies, existing approaches to build a company structure around them become infeasible. To be prepared for a potential disruption in the future, a complex combination of talent, technology and planning, as well as a tailored method for strategic positioning is needed.

First movers that explore NGC in its infancy will have an advantage when it reaches maturity, gaining a head start in applying it profitably. However, companies often do not have the resources, time or skills to become a first mover and might fear falling behind. Nonetheless, this disadvantage will be negligible upon broader market adoption of the NGC technologies (e.g., as SaaS), provided that the company is NGC ready. This does not necessarily require fully fledged research or entire business units dedicated to these topics. Rather, it means understanding which benefits can be gained from using NGC, knowing how it can be applied to specific business problems (e.g., by examining small-scale proof-of-concept (PoC) solutions) and knowing where to look for implementation support (e.g., internal expert groups, external help or collaboration). Such exploration projects can realistically be completed in about three to six months.

This article focuses on the importance of positioning an organization strategically for NGC to ensure the greatest possible benefit from these innovative technologies. This includes strategies for building up and positioning an NGC team, identifying high-value business use cases and evaluating their potential. While this approach can be applied to all NGC paradigms, the focus of this article lies specifically on QC. Its extensive range of possible applications, thriving ecosystem, industry investments and the promise of exceptional computational power make it a prime example. On the technical side, important points to consider when designing quantum formulations of the associated computational problems are reviewed. Furthermore, the use of modular software architectures that enable rapid PoC development is discussed.

Strategic Placement for NGC Readiness

The following section will go through the essential strategic decisions and required actions for starting the NGC journey of a company: choosing a suitable focus, finding the right talent, establishing a team and participating in the ecosystem are some of the key points to consider.

Strategic Orientation

Depending on the overall strategy of an organization, a clear focus for developing the capability to use NGC should be chosen and adhered to. Two main options can be distinguished: the scalability and integration focus and the tech exploration focus.

The scalability and integration focus prioritizes bringing tangible benefits to the company. For that, mainly technologies that are already mature enough to compete with classical computation today or in the immediate future are in scope. The goal is to find urgent problems whose solution is limited by the currently available computational power. If a company chooses this focus, a strong collaboration between business units and the IT department is needed, with the team working on improving an existing approach or using new technologies and different hardware to increase the computational power. Example technologies that usually fit here are HPC and edge computing.

In contrast, the tech exploration focus concentrates on the NGC technology itself and not on its immediate and direct impact on the business. This means that the choice of use cases has a different goal, namely to explore the technology with fitting underlying problems. The technologies that are investigated with these use cases do not need to compete with classical computational solutions yet but should have the potential to compete with them in the mid- to long-term future. QC is one of the technologies that has disruptive potential but is not yet competitive with classical computation. Hence, this focus is usually the right choice for QC.

Profiles and Team Setup

The strategic usage of NGC requires a specialized skillset combined with a clear vision and a structured list of requirements. This skillset includes not only IT, mathematics and physics abilities but also business analyst skills to properly estimate potential business challenges and impact. Analytical problem-solving and creative thinking are essential to create new applications with NGC. Also, due to the highly interdisciplinary nature of NGC, a team with diverse backgrounds in multiple scientific fields helps to advance the technologies efficiently. To attract people with this rare and specialized skillset, companies need a tailored strategy. For creating this strategy or finding people with a certain skillset, external help can be very useful.

As an example, for QC it is very helpful to have a solid understanding of quantum mechanics, quantum information theory and computer science. On top of that, proficiency in (quantum) software development and quantum circuit design will be invaluable when designing quantum software solutions. The team should have a strong command of programming languages (mainly Python) and hands-on experience with open-source quantum frameworks like Qiskit or PennyLane. QC intersects with various fields: cryptography, materials science, drug discovery, finance and more. Acquiring domain-specific knowledge is essential for applying quantum principles to real-world problems.

Team Establishment

After setting up an NGC team, it needs to be positioned as the single point of contact for innovative topics and be promoted within the company’s available channels. This standing of an expert (group) helps to find use cases and the right resources to become NGC ready. Ways to achieve this can be:

- Presentations of NGC and the team in company community calls

- Newsletter with interesting recent developments for interested groups (e.g., presentation of new QC hardware or algorithms)

- Global newsletter with high-level overviews of NGC topics (e.g., news related to the company’s industry)

- Internal workshops to educate coworkers and executives on NGC

- Communication of achieved key performance indicators (KPIs)

Definition of KPIs

Even though most NGC technologies are still immature, the expert group needs to have a good standing in the company: combined with the tools that were already touched upon, the definition and completion of clear and measurable KPIs will help to achieve this. The realization and dimension of the KPIs are individually connected to the focus and goals of the company. Setting them up is especially challenging when it comes to novel technologies but will show that the group can already have an impact, independent of the specific focus.

General KPIs can be the number of identified and implemented use cases as PoCs or the number of conducted internal workshops. If a company chooses the scalability and integration focus, KPIs can be the number of engagements with different business units. For the tech exploration focus, KPIs can include the number of attended conferences, explored technologies, or published scientific or business papers.

Ecosystem and Collaboration

The NGC ecosystem has grown rapidly in recent years and will continue to do so. Universities, small businesses and global players are working together in consortia and collaborations to foster the development of NGC hardware and software and to shape the evolution of the field in their interest.

Collaboration can have a big impact on the development of NGC readiness in participating organizations: ideas can be shared and shaped, best practices can be communicated and forces can be joined to solve (joint) use cases. Especially in times of a shortage of skilled personnel, all involved organizations benefit from a collaboration, particularly in the early era of NGC, where practical usage still lies in the future and (almost) no competitive edge can be gained by exploring the technologies alone.

For example, a few consortia that play a role in the European NGC ecosystem are:

- PlanQK [4], a consortium funded by the German government focused on the intersection of QC and artificial intelligence. It aims to build a QC platform to strengthen Germany’s market position and provide German companies with support and services related to QC. A wide variety of industry and academic partners are participating within PlanQK.

- QuIC [5], a consortium that focuses on the development of quantum technology in Europe. For this, it guides decisions of European institutions, organizes international events and provides a platform for collaboration, e.g., via working groups.

- Bitkom [6], which has a working group focused on providing an exchange forum for the German industry in HPC and QC. It aims to support decision-makers, raise interest in the technologies, set up strategic roadmaps and cooperate with other organizations.

Of course, whether a particular consortium will help an organization with its NGC strategy depends on the chosen focus, individual goals and available resources.

Business Use Case Selection

Selecting viable business use cases to examine is the next step after setting up a team and strategy. The most important criteria for this will be discussed with QC as an example. However, the described procedure applies similarly to other NGC technologies, especially those suited for the tech exploration focus.

To distill use cases with disruptive quantum potential from the many problems businesses encounter every day, the quantum viability of a use case must be the basis of the selection. Then, it is essential to prioritize those use cases with a high economic value. By focusing on these, companies can drive significant returns on investment. Of course, the intersection of high-value and quantum-viable use cases might be small [7].

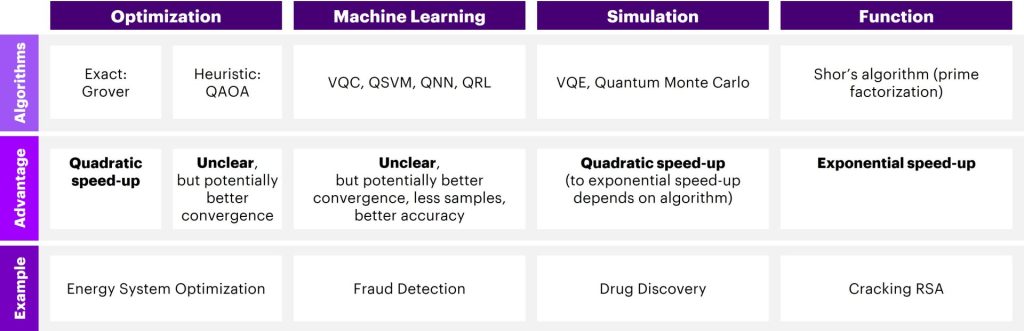

QC is naturally suited for quantum (chemical) simulations [8] but can also be used for optimization or machine learning (see Fig. 1 for a high-level overview). However, due to today’s restricted hardware, one cannot expect a quantum advantage over a state-of-the-art classical method soon, at least not for any sensible industry-relevant problem. Realistically, it is also unclear whether a quantum algorithm with a sub-cubic speed-up compared to the best classical algorithms will be viable in the next two decades at all. The reason for this lies (among other things) in the noise and slower execution time compared to classical computers [10]. This means that the quantum solution of a selected use case should have at least a cubic, ideally a super-polynomial or exponential speed-up (Infobox 3). On top of that, if heuristic quantum algorithms hold promise in practical terms, they should be considered even if they do not have a proven theoretical speed-up.

Fig. 1: Overview of the main applications for quantum computing, with a selection of algorithms, possible advantages and example use cases (for more details see [9]).
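To illustrate why the degree of a polynomial speed-up matters when quantum operations are slower than classical ones, the following minimal sketch computes a break-even problem size. It rests on a deliberately simple model that is an assumption of this example, not a result from [10]: the classical algorithm needs N operations, the quantum algorithm needs N^(1/d) operations for a speed-up of degree d, and both operation times are hypothetical placeholder values.

```python
def break_even_size(t_classical: float, t_quantum: float, degree: float) -> float:
    """Problem size N* at which classical and quantum runtimes are equal.

    Simplified model (assumption): classical runtime = N * t_classical,
    quantum runtime = N**(1/degree) * t_quantum.
    Solving N * t_c = N**(1/d) * t_q gives N* = (t_q / t_c) ** (d / (d - 1)).
    """
    if degree <= 1:
        raise ValueError("a speed-up requires degree > 1")
    ratio = t_quantum / t_classical
    return ratio ** (degree / (degree - 1))


# Hypothetical placeholder values, chosen only for illustration.
T_CLASSICAL = 1e-13  # effective seconds per operation on parallel classical hardware
T_QUANTUM = 1e-4     # seconds per quantum operation

break_even_runtime = {}
for degree in (2, 3, 4):
    n_star = break_even_size(T_CLASSICAL, T_QUANTUM, degree)
    # Runtime both approaches need at the break-even point
    break_even_runtime[degree] = n_star * T_CLASSICAL
    print(f"degree {degree}: N* = {n_star:.2e}, "
          f"runtime at break-even = {break_even_runtime[degree]:.2e} s")
```

In this model, a quantum algorithm only pays off for runs longer than the break-even runtime, and that threshold shrinks quickly as the degree of the speed-up grows. Real estimates depend on many further factors, such as noise, error correction overhead and data input and output.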

Infobox 3: Complexity Theory Overview [7]

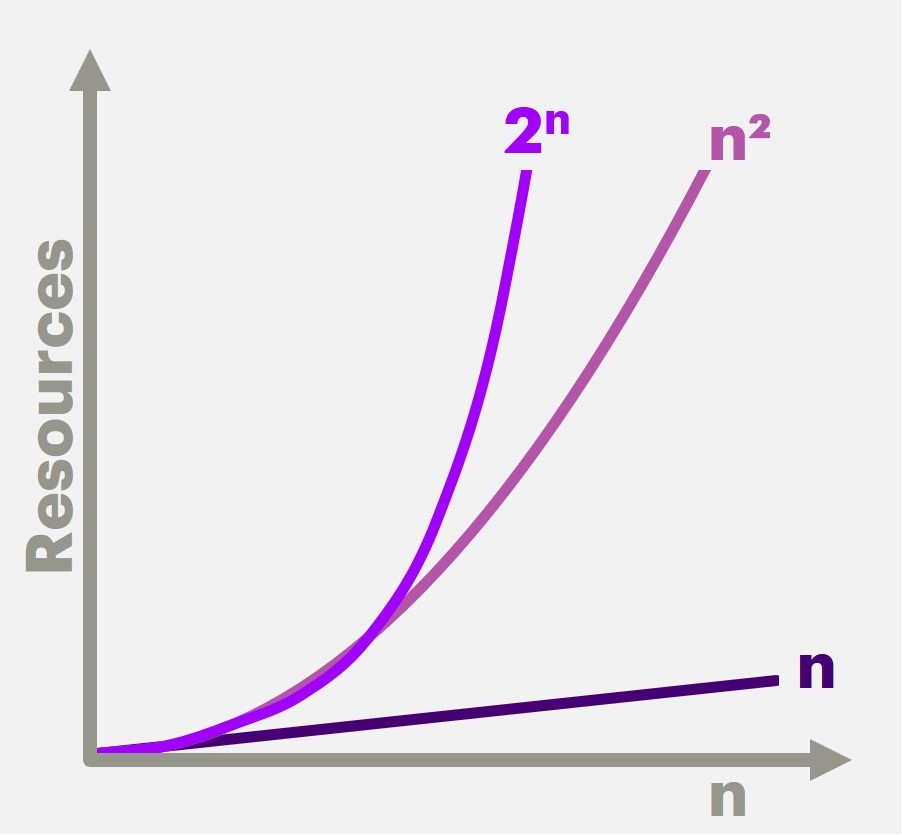

Complexity theory studies resource scaling of algorithms for solving computational problems. The problems can be classified based on their inherent difficulty (i.e., increase in solution time based on input size). A speedup refers to the improvement in the runtime or efficiency of an algorithm.

The most prominent types of complexities are linear, polynomial and exponential: if an input of size n is given, the problem difficulty scales like n, n^m or m^n, respectively (see Fig. 2).

If an algorithm provides a polynomial speed-up over another, it means that it decreases the complexity by a polynomial factor, e.g., n² → n or 2^n → 2^(n/2). More interesting is the exponential speed-up, where the complexity decreases by an exponential factor, e.g., 2^n → n² or n → log(n).

Fig. 2: Examples of exponential (2^n), polynomial (here: quadratic, n²) and linear (n) scaling.
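The difference between these scaling classes can be made tangible with a few numbers. The short sketch below tabulates linear, quadratic and exponential scaling for some input sizes, together with one example each of a polynomial and an exponential speed-up applied to an exponential algorithm. The function name and the chosen input sizes are only illustrative.

```python
def scaling_table(sizes):
    """Return one row of operation counts per input size n (n should be even)."""
    rows = []
    for n in sizes:
        rows.append({
            "n": n,
            "linear": n,
            "quadratic": n ** 2,
            "exponential": 2 ** n,
            # Polynomial (here: quadratic) speed-up of 2^n: 2^n -> 2^(n/2)
            "after_polynomial_speedup": 2 ** (n // 2),
            # Exponential speed-up of 2^n: 2^n -> n^2
            "after_exponential_speedup": n ** 2,
        })
    return rows


rows = scaling_table([10, 20, 40])
for row in rows:
    print(row)
```

Even for moderate n, the exponentially scaling column dwarfs all others, which is why an exponential speed-up is so much more valuable than a polynomial one.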

To easily evaluate such use cases, one can utilize a use case canvas that, with some pre-defined questions, indicates whether the evaluated use case is suited for a quantum advantage or, if not, whether other NGC technologies might be worth considering.

Evaluating quantum implementations of high-value business use cases is important. QC might not make sense at scale currently, but having already examined the use cases will jump-start future development and provide a setup for potential quantum benefits later. Additionally, looking at an existing problem in more detail for a quantum evaluation may reveal possibilities to improve existing solutions.

Use Case Journey

Fig. 3: End-to-end quantum journey from strategic setup to quantum readiness. A special focus is set on the steps of the quantum use case journey.

After the selection of a specific use case, the use case journey can begin. Generally, it follows a more or less fixed path (see also Fig. 3), consisting of the following steps [7]:

- Definition of the business problem

- Formulation of a computational problem based on the business problem

- Transformation to a quantum-suitable problem

- Solution of this problem

- Evaluation, benchmarking and comparison

It is important to optimize this workflow and to identify decision points, where further development of the use case should be critically evaluated and might be stopped or postponed.

The first step of the quantum use case journey is to precisely define a business problem. For this, all required data needs to be collected and prepared. Statistical analysis and data preprocessing should be conducted.

Computational Problem Formulation

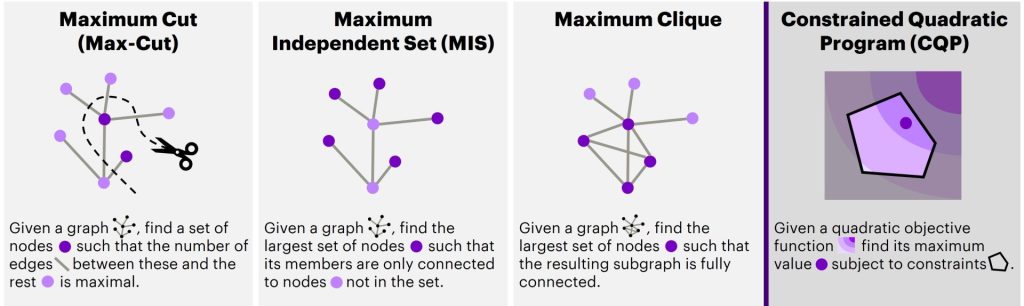

After clearly defining the business problem, the next and one of the most crucial steps is to formulate a computational problem from it. This is the basis for all further steps: it determines the feasible algorithms and, with that, the hardware and software that may be used for the solution. Focusing on the general problem type of optimization, common problems that arise for a multitude of use cases are, e.g., the NP-hard Max-Cut optimization or, more generally, the Constrained Quadratic Program (CQP, see Fig. 4) [7].

Fig. 4: Explanations of Max-Cut, MIS and Maximum Clique, and CQP as a possible general representation of these problems.
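As a concrete instance of such a computational problem, the following minimal sketch states Max-Cut in code: split the nodes of a graph into two groups so that as many edges as possible connect the two groups. The small graph is a hypothetical example, and the exhaustive search only works for tiny instances because it checks all 2^n assignments. It serves as a classical reference, not as a practical solver.

```python
from itertools import product


def cut_value(edges, assignment):
    """Number of edges whose endpoints lie in different groups."""
    return sum(1 for u, v in edges if assignment[u] != assignment[v])


def brute_force_max_cut(num_nodes, edges):
    """Try every 0/1 assignment of nodes to groups and keep the best one."""
    best_value, best_assignment = -1, None
    for assignment in product((0, 1), repeat=num_nodes):
        value = cut_value(edges, assignment)
        if value > best_value:
            best_value, best_assignment = value, assignment
    return best_value, best_assignment


# Hypothetical example graph with 5 nodes and 6 edges
EDGES = [(0, 1), (0, 2), (1, 2), (1, 3), (2, 4), (3, 4)]
best_value, best_assignment = brute_force_max_cut(5, EDGES)
print(best_value, best_assignment)
```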

Before seeking a solution to the computational problem, an analysis of whether quantum solutions can have an advantage over classical solutions is needed. This is the first decision point. If there is a possibility of an advantage in the future, the use case journey can continue; otherwise it should end here. An exception can be made if the tech exploration focus is selected and the decision is backed up with valid arguments (e.g., availability of good data).

Quantum-suitable Problem Formulation

After a mathematically precise version of the business problem has been formulated, it needs to be brought into a form suitable for QC. However, only a few of the common computational problems can be mapped to qubits directly. Therefore, these problems usually must be transformed or reduced. There might be multiple ways to do this. One prominent encoding is the Quadratic Unconstrained Binary Optimization (QUBO) [11], whose goal is to minimize a quadratic objective built from binary variables without any constraints. This type of problem can be directly translated into a quantum system.
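A minimal sketch of such a transformation, using Max-Cut as the example: an edge (u, v) is cut exactly when x_u + x_v - 2·x_u·x_v equals 1, so maximizing the cut is equivalent to minimizing the sum of -x_u - x_v + 2·x_u·x_v over all edges. These coefficients form the QUBO matrix Q. The graph and the function names are illustrative assumptions, and the exhaustive minimization only stands in for a real QUBO solver.

```python
from itertools import product


def max_cut_to_qubo(num_nodes, edges):
    """Build an upper-triangular QUBO matrix Q for Max-Cut.

    Minimizing sum_{i<=j} Q[i][j] * x_i * x_j maximizes the number of cut edges.
    """
    q = [[0] * num_nodes for _ in range(num_nodes)]
    for u, v in edges:
        i, j = min(u, v), max(u, v)
        q[i][i] -= 1   # linear term -x_i (x_i^2 = x_i for binary variables)
        q[j][j] -= 1   # linear term -x_j
        q[i][j] += 2   # quadratic term +2 * x_i * x_j
    return q


def qubo_energy(q, x):
    """Evaluate the QUBO objective for a binary vector x."""
    n = len(x)
    return sum(q[i][j] * x[i] * x[j] for i in range(n) for j in range(i, n))


def brute_force_qubo(q):
    """Exhaustively minimize the QUBO objective (tiny instances only)."""
    return min(product((0, 1), repeat=len(q)), key=lambda x: qubo_energy(q, x))


# Hypothetical example graph with 5 nodes and 6 edges
EDGES = [(0, 1), (0, 2), (1, 2), (1, 3), (2, 4), (3, 4)]
Q = max_cut_to_qubo(5, EDGES)
best_x = brute_force_qubo(Q)
print(best_x, qubo_energy(Q, best_x))
```

The minimal energy is the negative of the maximum cut size, so the original objective can be read off directly from the QUBO solution.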

It is important to keep in mind that the necessary transformation depends on the underlying hardware specifications: a neutral atom quantum computer (in analog mode) with qubits arranged on a 2D grid might need the problem formulated as an instance of Maximum Independent Set (MIS) on a unit disk graph [12]. A photonic quantum computer using Gaussian Boson Sampling (GBS) would need the problem formulated as a graph-based problem such as Maximum Clique [13] (see Fig. 4).
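To make the unit disk graph formulation more concrete, the following sketch builds such a graph from 2D positions (two nodes are connected if their distance is at most a given radius) and finds a maximum independent set by exhaustive search. The positions and the radius are hypothetical, and the search is a classical stand-in for tiny instances, not a model of how neutral atom hardware computes the result.

```python
from itertools import combinations
from math import dist


def unit_disk_edges(positions, radius):
    """Connect every pair of points whose Euclidean distance is <= radius."""
    return [(i, j) for i, j in combinations(range(len(positions)), 2)
            if dist(positions[i], positions[j]) <= radius]


def is_independent(nodes, edges):
    """True if no edge connects two of the selected nodes."""
    chosen = set(nodes)
    return not any(u in chosen and v in chosen for u, v in edges)


def brute_force_mis(num_nodes, edges):
    """Return a largest independent set, trying the largest subsets first."""
    for size in range(num_nodes, 0, -1):
        for subset in combinations(range(num_nodes), size):
            if is_independent(subset, edges):
                return subset
    return ()


# Hypothetical positions on a 2D grid with unit spacing
POSITIONS = [(0, 0), (1, 0), (2, 0), (0, 1), (1, 1)]
edges = unit_disk_edges(POSITIONS, radius=1.0)
mis = brute_force_mis(len(POSITIONS), edges)
print(edges, mis)
```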

Usually, naive transformations increase the problem size to an extent that is no longer feasible for current quantum devices. Therefore, finding a clever formulation of the problem is almost as important as selecting the use case in the first place.

For this reason, before encoding the computational problem, it must be simplified by reducing instance size, developing smart variable encodings and splitting it into multiple parts. If needed, the problem might also be approximated to trade accuracy for smaller size. Related impacts on the solution must be evaluated in a later step.

Also, highly constrained problems might not be feasible. For example, in QUBO formulations constraints are often encoded via penalties [14]. Apart from the difficulty of choosing the right penalty factors, a high number of penalty terms also leads to a more complex cost landscape of the QUBO objective. This makes it hard for standard approaches like the Quantum Approximate Optimization Algorithm [15] to find the optimal solution. It is therefore advisable to reduce the number of constraints in a problem that is to be formulated as a QUBO as much as possible. This may be done by encoding some constraints directly into the binary variables. Furthermore, methods and techniques from mathematical optimization and operations research may be used for problem size and constraint reduction. These include Lagrangian relaxation, (Quantum) Benders decomposition [16] or slack variable optimization [17].
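The role of the penalty factor can be illustrated with a small sketch. It encodes the constraint "choose exactly one option" as the penalty term P·(x_1 + ... + x_n - 1)² and adds it to a linear cost. The costs and penalty values are hypothetical, and the exhaustive search again only stands in for a QUBO solver.

```python
from itertools import product


def one_hot_qubo(costs, penalty):
    """QUBO for: minimize sum(c_i * x_i) subject to sum(x_i) == 1.

    Expanding P * (sum(x) - 1)^2 with x_i^2 = x_i gives
    -P on the diagonal, +2P for every pair i < j, and a constant offset P.
    """
    n = len(costs)
    q = [[0.0] * n for _ in range(n)]
    for i in range(n):
        q[i][i] = costs[i] - penalty
        for j in range(i + 1, n):
            q[i][j] = 2.0 * penalty
    return q, penalty  # (matrix, constant offset)


def energy(q, offset, x):
    n = len(x)
    return offset + sum(q[i][j] * x[i] * x[j] for i in range(n) for j in range(i, n))


def minimizer(q, offset):
    return min(product((0, 1), repeat=len(q)), key=lambda x: energy(q, offset, x))


COSTS = [3.0, 5.0, 4.0]  # hypothetical costs of three options

solutions = {}
for penalty in (1.0, 10.0):
    q, offset = one_hot_qubo(COSTS, penalty)
    solutions[penalty] = minimizer(q, offset)
    print(f"penalty {penalty}: best x = {solutions[penalty]}")
```

With the small penalty, violating the constraint is cheaper than selecting any option, so the minimizer is infeasible. With the larger penalty, the minimizer selects exactly the cheapest option. Every additional constraint adds such terms to the matrix, which is one reason to keep their number low.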

Preserving the essential features of the problem in such a reduced formulation allows for good results on QC hardware even with current limitations. Getting the formulation right can make the difference between being able to run the problem on hardware or not, waiting days or minutes for simulations to finish, or having a quantum advantage or not.

Solving the Business Problem

With a quantum-suitable problem ready to go, the next step is to solve it using quantum algorithms. Firstly, quantum simulators should be used to confirm that the algorithm works as expected and to save on hardware costs. Afterwards, real quantum hardware can be used to execute the algorithm.
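What a quantum simulator does in this step can be sketched in a few lines of plain Python. The example below simulates a single layer of the Quantum Approximate Optimization Algorithm for Max-Cut on a hypothetical 4-node ring by tracking all 16 amplitudes of the state vector, and it scans the two circuit parameters on a coarse grid. In practice, one would rather use the simulators shipped with frameworks such as Qiskit or PennyLane. This hand-written version is only meant to show the principle.

```python
import cmath
import math

EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]  # hypothetical 4-node ring graph
NUM_QUBITS = 4
DIM = 2 ** NUM_QUBITS


def cut_value(bits: int) -> int:
    """Cut size of the assignment encoded in the bits of an integer."""
    return sum(1 for u, v in EDGES if ((bits >> u) & 1) != ((bits >> v) & 1))


COSTS = [cut_value(b) for b in range(DIM)]


def qaoa_expectation(gamma: float, beta: float) -> float:
    """Expected cut size after one QAOA layer, via state-vector simulation."""
    # Start in the uniform superposition of all bit strings
    state = [complex(1 / math.sqrt(DIM))] * DIM
    # Cost layer: phase exp(-i * gamma * cost) on every basis state
    state = [amp * cmath.exp(-1j * gamma * COSTS[b]) for b, amp in enumerate(state)]
    # Mixer layer: exp(-i * beta * X) = cos(beta) * I - i * sin(beta) * X per qubit
    c, s = math.cos(beta), -1j * math.sin(beta)
    for qubit in range(NUM_QUBITS):
        mask = 1 << qubit
        new_state = state[:]
        for b in range(DIM):
            if not b & mask:
                a0, a1 = state[b], state[b | mask]
                new_state[b] = c * a0 + s * a1
                new_state[b | mask] = s * a0 + c * a1
        state = new_state
    # Measurement statistics: probability-weighted average of the cut size
    return sum(abs(amp) ** 2 * COSTS[b] for b, amp in enumerate(state))


def grid_search(steps: int = 40):
    """Scan gamma and beta in [0, pi) and return the best (value, gamma, beta)."""
    best = (-1.0, 0.0, 0.0)
    for i in range(steps):
        for j in range(steps):
            gamma, beta = math.pi * i / steps, math.pi * j / steps
            value = qaoa_expectation(gamma, beta)
            if value > best[0]:
                best = (value, gamma, beta)
    return best


best_value, best_gamma, best_beta = grid_search()
print(f"best expected cut: {best_value:.3f} of {max(COSTS)} possible")
```

With both parameters set to zero, the circuit returns the random-guess average of 2 cut edges. A working implementation should find parameters that clearly exceed this value, which is the kind of sanity check a simulator run provides before paying for hardware time.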

Specifically in the current era of QC, the comparison and analysis of different algorithms and hardware architectures is very important. Different types of quantum hardware offer different advantages and disadvantages (for a review, see Ch. 5 of [18]):

- Quantum computers based on superconducting qubits provide good scaling properties [19]. As such, useful results with more than 100 qubits have been showcased [20]. Current systems provide mainly nearest-neighbor connectivity, limiting the natively runnable quantum circuits.

- Trapped ion–based quantum computers promise long coherence times, allowing many operations [21]. However, compared to other architectures the time for individual operations is longer. General advantages are low readout errors and the possibility of all-to-all connectivity simplifying quantum circuit design. State-of-the-art trapped ion processors reach up to 32 qubits [22].

- Other architectures using photons, neutral atoms, semiconductors and more all bring their own benefits and problems and should be evaluated in more detail.

Of course, the quantum solution must be benchmarked against state-of-the-art classical approaches in terms of run time, performance, accuracy and possibly other problem-specific metrics. It is important to note that the classical solvers should not be applied to the problem in its quantum formulation, but to the original (usually more precise) formulation. This ensures that the comparisons are not skewed in favor of the quantum solvers.

There are some methods that might improve the quality of the quantum solutions. Circuit cutting or knitting [23] can reduce the size of quantum circuits by splitting them into many smaller circuits (depending on the structure of the original circuit, the number of cut circuits could become infeasible), which might enable the solution of larger problem instances. Additionally, quantum error mitigation techniques like zero-noise extrapolation [24] or readout error mitigation can improve the solutions in exchange for longer run times and execution overhead [25].
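The idea behind zero-noise extrapolation can be sketched as follows: the same circuit is executed at several artificially increased noise levels, and the measured expectation values are extrapolated back to the zero-noise limit. The example uses a linear fit and synthetic data from a hypothetical exponential decay model. Real measurements, noise scaling methods and the best extrapolation function differ from device to device.

```python
import math


def linear_fit(xs, ys):
    """Least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return slope, mean_y - slope * mean_x


def zero_noise_extrapolation(scale_factors, values):
    """Extrapolate measured expectation values to noise scale factor 0."""
    _, intercept = linear_fit(scale_factors, values)
    return intercept


# Synthetic data (assumption): the ideal value 1.0 decays exponentially with noise.
IDEAL_VALUE = 1.0
SCALE_FACTORS = [1.0, 2.0, 3.0]  # 1.0 corresponds to the unmodified circuit
noisy_values = [IDEAL_VALUE * math.exp(-0.1 * s) for s in SCALE_FACTORS]

mitigated = zero_noise_extrapolation(SCALE_FACTORS, noisy_values)
print(f"unmitigated: {noisy_values[0]:.4f}, mitigated: {mitigated:.4f}")
```

The price of the improved estimate is visible in the sketch: the circuit has to be executed once per scale factor, which multiplies the run time.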

At the end, the solutions and results need to be transformed into a business outcome which needs to be evaluated from a business point of view. This evaluation results in the final decision point. If the solution is valuable and could potentially be implemented in the business, the use case development is continued. A transfer to production then involves other challenges that are not in scope of this article.

Accelerating Use Case Development

While going through the steps of the quantum use case journey, the problem formulations, solutions with different approaches, visualizations and subsequent application of the solutions to the business problem should be implemented as a PoC application. This provides a good showcase of the use case with comparatively small effort. There are many relevant problems and a growing number of different QC approaches and hardware backends. Thus, a fast PoC development process that covers many of them, as well as comparisons with classical methods, is desirable. In this way, a company can try out several providers and find its optimal setup.

To achieve such a fast development process, the use case–specific business logic and the backend logic should be split and modularized as much as possible (loose coupling). There are multiple advantages to this modularity: existing problem encodings, common mathematical problem formulations and algorithms can be reused without depending on specific use cases. Already implemented backend adapters can be harnessed and new hardware can be integrated seamlessly by using a unified API. On top of that, the conversion between typical computational problems can be automated.
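A minimal sketch of such a loosely coupled design is shown below. The use case code only produces a backend-independent problem object, and every solver, whether classical or quantum, sits behind the same small interface. All class and function names are hypothetical, and the two classical backends merely stand in for adapters to real quantum hardware or simulators.

```python
from abc import ABC, abstractmethod
from itertools import product
import random


class QuboProblem:
    """Backend-independent problem description (upper-triangular QUBO matrix)."""

    def __init__(self, matrix):
        self.matrix = matrix
        self.size = len(matrix)

    def energy(self, x):
        return sum(self.matrix[i][j] * x[i] * x[j]
                   for i in range(self.size) for j in range(i, self.size))


class SolverBackend(ABC):
    """Unified API that every backend adapter implements."""

    @abstractmethod
    def solve(self, problem: QuboProblem) -> tuple:
        """Return a binary solution vector for the given problem."""


class BruteForceBackend(SolverBackend):
    """Exact classical reference for tiny instances."""

    def solve(self, problem):
        return min(product((0, 1), repeat=problem.size), key=problem.energy)


class RandomSamplingBackend(SolverBackend):
    """Placeholder for a sampling-based backend such as a quantum device."""

    def __init__(self, num_samples=20, seed=0):
        self.num_samples = num_samples
        self.rng = random.Random(seed)

    def solve(self, problem):
        samples = [tuple(self.rng.randint(0, 1) for _ in range(problem.size))
                   for _ in range(self.num_samples)]
        return min(samples, key=problem.energy)


def compare_backends(problem, backends):
    """Run the same problem on all backends and collect the achieved energies."""
    return {name: problem.energy(backend.solve(problem))
            for name, backend in backends.items()}


# Use case side: Max-Cut on a triangle, already converted to a QUBO matrix
problem = QuboProblem([[-2, 2, 2], [0, -2, 2], [0, 0, -2]])
results = compare_backends(problem, {
    "brute_force": BruteForceBackend(),
    "random_sampling": RandomSamplingBackend(),
})
print(results)
```

Adding a new hardware provider then means writing one more adapter class, while the use case logic and the benchmarking code remain untouched.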

To quick-start a PoC, project templates should be used. These may provide a pre-defined project structure with development best practices, containerization for cloud deployment or API prototypes.

These points speed up the PoC development significantly and simplify the integration of solution analytics and comparisons to classical algorithms. To further accelerate development and evaluation, especially the execution of simulators and benchmarks, cloud computing, HPC instances, GPU simulators and parallelization should be considered.

The same modularization and generalization can be applied to the business problem evaluation, too. Single-page use case templates that contain all relevant business information and first technical details to determine the QC viability are very helpful. Furthermore, suitable quantum algorithms as well as hardware and software choices can be documented. With these templates, the NGC team has the details of all gathered use cases at hand in a structured form.

A rapid and highly modular development process increases the speed at which different use cases can be evaluated. This helps in growing a portfolio of quantum applications for a potential future quantum disruption.

Conclusion

This article explored the strategic and technical steps necessary to prepare organizations for an NGC disruption in the future. One of the major candidates for such a disruption, namely QC, was considered in more detail. After laying down the strategic orientation, setting up and positioning an NGC team with clear KPIs and role profiles is the first part of this process. Additionally, ways for collaboration and concrete measurement of progress were discussed.

Following this, the next steps of the quantum journey were outlined: exploration of company use cases suitable for QC, use case selection criteria and finally the full quantum use case journey from business problem formulation to benchmarking of its quantum solutions. Part of this is the careful optimization of computational and quantum-suitable problem formulations, which is crucial for the actual application of QC. Lastly, tools for accelerating the PoC development process and rapidly increasing the pace of use case evaluations have been discussed.

Development of NGC technology is accelerating and will lead to disruptions in many industries. It is crucial for players in these industries to be adequately prepared for the coming impact by evaluating technologies such as QC early on and building up competence and experience. Having a ready portfolio of quantum and next-gen applications for pressing business problems facilitates the inevitable step of scaling when the technology is mature. This feat might seem challenging but can be accomplished with the advice of this article, collaborations and external help.

References

[1] C.D. Schuman et al., Opportunities for neuromorphic computing algorithms and applications. Nat. Comput. Sci., 2022

[2] S.S. Gill et al., Quantum computing: A taxonomy, systematic review and future directions. Softw. Pract. Exp., 2022

[3] D.J. Bernstein and T. Lange, Post-quantum cryptography. Nature, 2017

[4] PlanQK – Platform and Ecosystem for Quantum-inspired Artificial Intelligence. https://planqk.de/en/ (2023)

[5] Homepage – QuIC. https://www.euroquic.org/ (2023)

[6] Gremium High Performance Computing & Quantum Computing | Bitkom e. V. https://www.bitkom.org/Bitkom/Organisation/Gremien/High-Performance-Computing-Quantum-Computing.html (2023)

[7] S. Senge et al., Quantum Incubation Journey: Theory Founded Use Case and Technology Selection. Digit. Welt, 2021

[8] A.J. Daley et al., Practical quantum advantage in quantum simulation. Nature, 2022

[9] K. Bharti et al., Noisy intermediate-scale quantum algorithms. Rev. Mod. Phys., 2022

[10] T. Hoefler et al., Disentangling Hype from Practicality: On Realistically Achieving Quantum Advantage. Commun. ACM, 2023

[11] F. Glover et al., Quantum bridge analytics I: a tutorial on formulating and using QUBO models. Ann. Oper. Res., 2022

[12] M.-T. Nguyen et al., Quantum Optimization with Arbitrary Connectivity Using Rydberg Atom Arrays. PRX Quantum, 2023

[13] T.R. Bromley et al., Applications of near-term photonic quantum computers: software and algorithms. Quantum Sci. Technol., 2020

[14] M. Ayodele, Penalty Weights in QUBO Formulations: Permutation Problems. Evolutionary Computation in Combinatorial Optimization, 2022

[15] E. Farhi et al., A Quantum Approximate Optimization Algorithm. arXiv, 2014

[16] Z. Zhao et al., Hybrid Quantum Benders’ Decomposition For Mixed-integer Linear Programming. arXiv, 2021

[17] A. Verma and M. Lewis, Variable Reduction For Quadratic Unconstrained Binary Optimization. arXiv, 2021

[18] National Academies of Sciences, Engineering, and Medicine, Quantum Computing: Progress and Prospects. 2019

[19] M. Kjaergaard et al., Superconducting Qubits: Current State of Play. Annu. Rev. Condens. Matter Phys., 2020

[20] Y. Kim et al., Evidence for the utility of quantum computing before fault tolerance. Nature, 2023

[21] C.D. Bruzewicz et al., Trapped-ion quantum computing: Progress and challenges. Appl. Phys. Rev., 2019

[22] S.A. Moses et al., A Race Track Trapped-Ion Quantum Processor. arXiv, 2023

[23] C. Piveteau and D. Sutter, Circuit knitting with classical communication. arXiv, 2022

[24] K. Temme et al., Error Mitigation for Short-Depth Quantum Circuits. Phys. Rev. Lett., 2017

[25] Z. Cai et al., Quantum Error Mitigation. arXiv, 2022
